AI and the End of the World

Stray thoughts on a bizarre future.

Things Are About to Get Weird

Four years ago last month, I wrote:

“The day will come, and perhaps soon, when many of the words, pictures, and videos, perhaps even the ideas, that we encounter on social media are generated by artificial intelligence. The arrival of synthetic media—not just deepfakes; picture entire essays, essays like this one, springing from a digital hand—will bring all sorts of exciting possibilities. But it will also make it that much harder to draw defensible lines between real and fake.”

Have you tried OpenAI’s deep research function? We’re basically there. The quote is from before the release of ChatGPT, back (by which I mean ten minutes ago) when AI-generated text would start running off the rails after about a paragraph. Now you can get a tight, rigorous essay that runs for thousands of words.

And images? A few years back, the cutting edge was a still photo of a person who doesn’t exist. Now we have videos of walking, talking fake people—fake people who can joke about the metaphysical implications of their nonreality:

Watch that video closely, and you’ll notice a judge who, for no good reason, has an audience behind him. The glitches are still there. But I don’t find it hard to imagine developing social relationships with characters like this, once they’re more lastingly rendered.

I’ll say it again, with greater conviction: the world is about to get a lot less legible.

How do you know what’s real, when the fake stuff is so convincing? And in any event, will the realism of the fakery distort our notions of what “real” even means?

How do you know the state of the world, if it’s changing rapidly? To navigate reality, people need a basic sense of what is technologically possible. As AI advances, people’s baseline reasoning framework will become radically unstable.

How do you know what other people are thinking, when everyone lives in a bespoke AI bubble? They read articles generated just for them. They talk to their own AI characters. Soon enough, some will inhabit their own little worlds—ancient people with ancient outlooks, medieval people with medieval outlooks, utopians in their utopias. This might be just the next step after movies and video games. Or it might be a paradigm shift, a source of radical change. Individuals could soon do, on a personal scale, what the Amish do now.

What’s going on in other places? Much has been said about the cultural fragmentation that followed the end of the network-news era. AI will accelerate that trend, as people form communities built around custom AI teachers, newsfeeds, and sense-making institutions. There will be strong centripetal forces reinforcing subcultures. (It’s possible that personal AI will be the dominant force in some places, communal AI in others—a recipe for maximum division.)

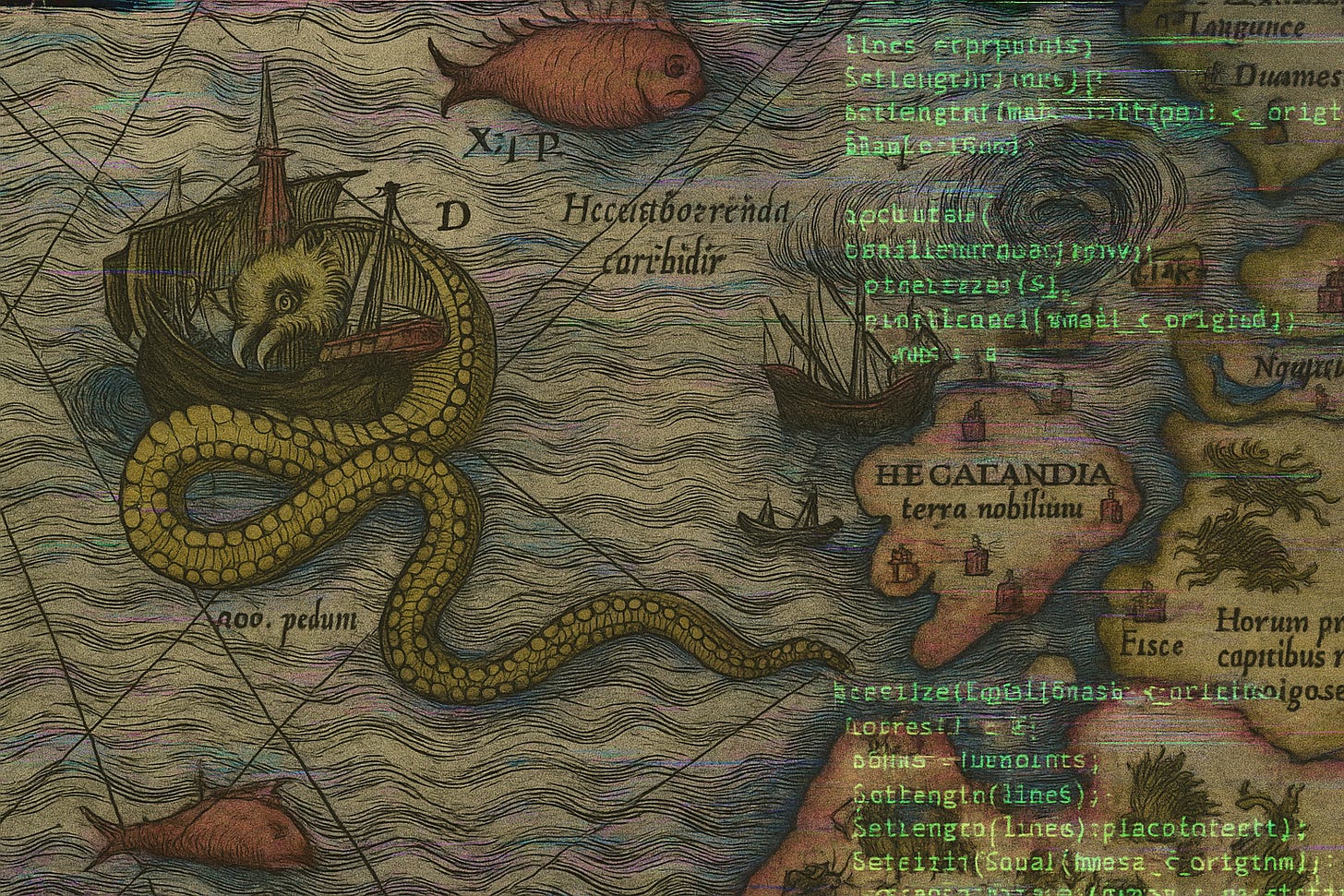

In some ways, a mental splintering would be a return. Medieval villagers lived in small, local cognitive landscapes. News from far-off lands was scarce, and made little sense. Herodotus gossiped about India’s gold-digging ants and Libya’s people without heads. A lot of discourse is, I suspect, about to sound more like that again.

AI Endangers the Enlightenment

All this bodes poorly for liberalism and the Enlightenment project. People have long worried that social media is rife with rabbit holes and echo chambers. That fear has always been a bit overblown. But AI could make the problem quite pressing.

Humans are adaptable—more so, in my view, than so-called experts sometimes assume. I don’t think mass insanity is imminent. But I do think we’re about to witness the flowering of a new romanticism—a return of myth, prophecy, superstition, and straight-up weirdness. William Blake will have his revenge. The Sixties might look tame by comparison.

I expect a feedback loop between AI-driven fragmentation and AI-driven art. People are about to stop treating AI as a gimmick, and start treating it as a medium. What the Beatles did with the rock band, a bunch of young virtuosos no one’s heard of yet will do with generative AI. They’ll have a lot more to work with than did John, Paul, George, and Ringo; AI will be a prosthesis for the imagination. No one will be famous like the Beatles, though, because culture and religion will be detonating outward in five hundred directions at once.

A touch of the romantic is great. But it’s hard to picture this all going smoothly. There were the Beatles, but also Jim Morrison. There was Woodstock, but also Jonestown. Full-blown irrationalism is a dead end. When a native tribe prays to a god to cure a disease, and a vaccine shows up and cures it—killing the god in the process—that’s progress. The anthropologist who mourns such developments is an enemy of the Enlightenment. Yet we might be drifting in that direction. Once a society sinks into delusional thinking, there’s no telling where things will end. People may start making very bad decisions indeed. The rise of MAHA could be just a preview.

Embrace the Chaos?

Before meditating further on disaster, let’s pause to consider the upsides of disorder.

If you’re an unbeliever, living in the Bay Area can at times feel like being a dhimmi under the thumb of the new religion. At my kids’ school, a sign in the gym displays, from top to bottom, the rainbow flag, then a BLM banner, then the American flag. But I no longer worry that my children will grow up in a nation where wokeness has swept all before it. If Microsoft Word is still around, it won’t have gone back to insisting that terms like “fellow” or “mastery” should not be used because they’re not “inclusive.” The most popular chatbots won’t conform to the woke-scold outlook. And even if elite opinion remains insufferable, AI will make it too hard to control the narrative. A lot of parents will be using AI to help teach their kids about stuff like the Book of Exodus, the Second Punic War, the Federalist Papers, and the narrowness of identity politics.

A related point is that the field of “AI ethics”—read: progressives who want AI to be progressive—is probably cooked. Quick: who is Joshua Cohen? He’s an accomplished political theorist at Stanford—a self-styled “professor of ethics in society.” I admire him; he’s a deep thinker. But you probably haven’t heard of him. Because he doesn’t matter. No one not already converted cares about a democratic theorist’s elaborate arguments for why only leftwing opinions are democratically legitimate. And I think the same fate awaits AI ethicists. Like their bioethicist cousins, they bring no special knowledge to their field and they carry no real authority. They’re just activists with fancy titles. They shouldn’t drive the AI alignment debate, and it looks like they won’t.

Worried about the postliberal right? Fair! It can certainly destroy. But I continue to doubt it can build. Misery? Yes. Handmaid’s Tale? No. Trumpism is politically potent but philosophically incoherent. It is fundamentally incompetent. It cannot drive the culture—if anything, it produces not culture but anti-culture. And as with wokeness, AI will stop it from gaining further traction and continuing its ascent.

In short, no successor ideology will prevail. But if cultural control remains perpetually up for grabs, that is itself a kind of freedom. So long as we don’t resort to tearing ourselves to pieces, that is a world where liberalism, understood as a perpetual argument over values, persists after all. Even without AI, we were never heading for national harmony. But with AI, we might get the prosperous and liberated confusion of cyberpunk.

And it must be said: living in a world that’s a little more mysterious, a little more enchanted, could be fun. This again might be nothing more than a return to the historical norm. Perhaps the antiseptic neatness of the modern world—the reign of measure, the reign of quantity—was just a blip.

Ground Rules Are Great

I’m not predicting complete anarchy. AI remains subject to existing law. Discriminatory hiring is still illegal, for instance, even if you use AI to do it. Meanwhile, AI-specific regulations are coming. Many of them—including some on testing, transparency, and alignment—are perfectly reasonable.

Laws that aim to slow AI down—as by placing restrictions directly on what models are allowed—would be bad. They would backfire. The next breakthroughs will come from somewhere—if not San Francisco, then offshore. Almost certainly, the new leader would be China, and we shouldn’t cede the AI future to the Chinese Communist Party. A trite sentiment, I know, but no less true for that. Nor should we heed those who, sitting in their highbrow NGOs, writing for their highbrow publications, and speaking at their highbrow summits, claim the right to “control” AI. They are aristocrats in thrall to the illusion of order. They’re Charles I, thinking he could suppress religious ideas by attacking the printing presses. The Technium will march forth. The status quo is not an option.

We should worry about AI. We should worry, indeed, about AI bias. AI is biased. It always will be; it’s not an objectivity machine. But this debate is about to evolve. If I were advising a job applicant today, I might recommend having a female name and adding pronouns to one’s resume. The models are, after all, biased in favor of such things! Next time you hear someone say “AI bias,” don’t reflexively assume they want reverse racism or to “decolonize the algorithm.” The fight over bias will be multidirectional. With any luck, this means color-blindness, sex-blindness, etc., will win the day.

The End of Normal

Is fracturing a symptom of decadence? Does decadence presage collapse? When a society stops being serious—when it starts acting like problems are not worth caring about—it’s fair to assume that a reckoning is at hand.

Debt. Infrastructure. The climate. The CCP. Growing complexity on every front. If we’re reduced to tribal squabbling, mystical ecstasies, and magical thinking, we won’t deal with any of it. Yet we continue to regress. The hard right is correct about (if also partly responsible for) the “return of politics.” Cutthroat competition. Dangerous rivalries. Appeals to the divine—both the old gods and the new. Debates not over technical matters of policy, but over the nature of the regime itself.

It feels like something big is coming. If it’s not the AI takeoff, it will be a war, or a debt crisis, or a constitutional collapse, or an outburst of civil strife. Maybe several at once. Granted, this is just a vibe—but it’s hard to shake. Normal is ending . . . Normal is ending . . .

“There has always been an emperor, there has always been a Rome. You cannot imagine anything else.” That’s how the historian Adrian Goldsworthy describes the outlook of a fifth-century Roman, on the cusp of the end. Such normalcy bias is natural. It is time, however, to shake it off. I do not think our civilization is about to fall. But I do think the near future is going to be very strange—something we cannot imagine.